Beginner Tutorial: Querying Semantics

This tutorial shows you how to examine the semantic buffer to find out what is on screen, for example which pixels contain grass or whether any buildings are visible. We will build a very simple mini-game using these functions.

Note

The mocking system demonstrated in the video has been updated in ARDK 1.3. For details on mock mode changes see the additional Mock Mode video.

Preparation

This tutorial assumes you have a working Unity scene with the ARDK imported. Please refer to the Getting Started With ARDK page for more details on how to import the ARDK package.

Steps

Create a new scene.

Create a new folder in your Asset tree called BasicSemantics.

Create a new scene in that folder. Right-click in the folder and select Create > Scene. Name it BasicSemanticsTutorial.

Add the ARDK managers.

Add the following managers to the camera object:

AR Session Manager

AR Camera Position Helper

AR Rendering Manager

AR Semantic Segmentation Manager

Make sure that the camera variable is set to the scene camera on all of the managers.

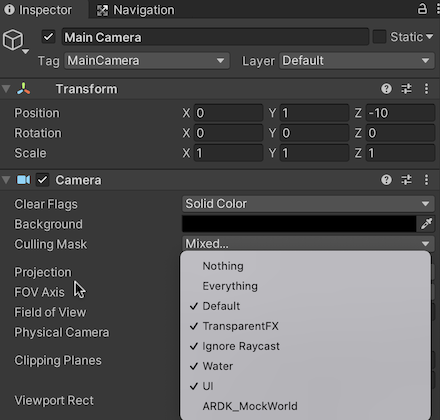

Update the camera settings.

Set the camera background to a solid black color.

Set Culling Mask to ignore the ARDK mock world.

Set up semantic mocking so we can test in the Unity editor. When we’re testing our Unity project in the Unity editor, and not on a device, we’ll use Playing in Mock Mode to simulate an environment with semantic channels.

Download the ARDK Mock Environments package from the ARDK Downloads page.

Import the package into your Unity project.

In the Lightship > ARDK > Virtual Studio window, go to the Mock tab and select the ParkPond prefab from the Mock Scene dropdown. This mock environment has several objects set up to use different semantic channels. The prefab will automatically be instantiated in your scene when you run in the Unity editor.

Set up a script to get the semantic buffer. We will make a script that takes in the semantic manager and catches a callback when the semantic buffer is updated. For this tutorial we’ll add the script to the camera to keep things simple. You can add the script to any game object in the scene.

Our QuerySemantics script:

    using System;
    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine;

    using Niantic.ARDK.AR;
    using Niantic.ARDK.Utilities;
    using Niantic.ARDK.Utilities.Input.Legacy;
    using Niantic.ARDK.AR.ARSessionEventArgs;
    using Niantic.ARDK.AR.Configuration;
    using Niantic.ARDK.AR.Awareness;
    using Niantic.ARDK.AR.Awareness.Semantics;
    using Niantic.ARDK.Extensions;

    public class QuerySemantics : MonoBehaviour
    {
        // most recent semantic buffer; null until the first update arrives
        ISemanticBuffer _currentBuffer;

        public ARSemanticSegmentationManager _semanticManager;
        public Camera _camera;

        void Start()
        {
            //add a callback for catching the updated semantic buffer
            _semanticManager.SemanticBufferUpdated += OnSemanticsBufferUpdated;
        }

        private void OnSemanticsBufferUpdated(ContextAwarenessStreamUpdatedArgs<ISemanticBuffer> args)
        {
            //get the current buffer
            _currentBuffer = args.Sender.AwarenessBuffer;
        }

        // Update is called once per frame
        void Update()
        {
        }
    }
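The script above subscribes to the buffer update event in Start, and the buffer field stays empty until the first update arrives. The following is a minimal sketch of that subscribe/unsubscribe pattern in plain C#, outside of Unity. `FakeSemanticManager` and `BufferListener` are hypothetical stand-ins invented for illustration (they are not ARDK types), and a string takes the place of the real buffer. In a MonoBehaviour, the matching place to unsubscribe would typically be `OnDestroy`.

```csharp
using System;

// Hypothetical stand-in for the semantic manager; only the event pattern is modeled.
public class FakeSemanticManager
{
    public event Action<string> SemanticBufferUpdated;

    // Simulates the manager publishing a new buffer to its subscribers.
    public void Publish(string buffer) => SemanticBufferUpdated?.Invoke(buffer);
}

// Hypothetical stand-in for the QuerySemantics script.
public class BufferListener
{
    private readonly FakeSemanticManager _manager;

    // Stays null until the first update arrives.
    public string CurrentBuffer { get; private set; }

    // Callers should check this before querying the buffer.
    public bool HasBuffer => CurrentBuffer != null;

    public BufferListener(FakeSemanticManager manager)
    {
        _manager = manager;
    }

    // Mirrors Start(): begin listening for buffer updates.
    public void Subscribe() => _manager.SemanticBufferUpdated += OnUpdated;

    // Mirrors OnDestroy(): stop listening so the manager no longer calls this object.
    public void Unsubscribe() => _manager.SemanticBufferUpdated -= OnUpdated;

    private void OnUpdated(string buffer) => CurrentBuffer = buffer;
}
```

The same guard applies to the tutorial script: any code that reads `_currentBuffer` is safer if it first checks that the field is not null, since a touch can arrive before the first buffer does.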

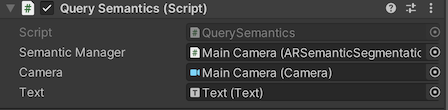

QuerySemantics script in the editor:

Modify Update to get touch input and use the semantics buffer to determine what is present at that point. This script will catch the touch input event and then query the buffer about that touch location. That information is then output to the console.

    void Update()
    {
        if (PlatformAgnosticInput.touchCount <= 0) { return; }

        var touch = PlatformAgnosticInput.GetTouch(0);
        if (touch.phase == TouchPhase.Began)
        {
            //list the channels that are available
            Debug.Log("Number of Channels available " + _semanticManager.SemanticBufferProcessor.ChannelCount);
            foreach (var c in _semanticManager.SemanticBufferProcessor.Channels)
                Debug.Log(c);

            int x = (int)touch.position.x;
            int y = (int)touch.position.y;

            //return the indices
            int[] channelsInPixel = _semanticManager.SemanticBufferProcessor.GetChannelIndicesAt(x, y);

            //print them to console
            foreach (var i in channelsInPixel)
                Debug.Log(i);

            //return the names
            string[] channelsNamesInPixel = _semanticManager.SemanticBufferProcessor.GetChannelNamesAt(x, y);

            //print them to console
            foreach (var i in channelsNamesInPixel)
                Debug.Log(i);
        }
    }
    } // end of the QuerySemantics class

Add additional calls to inspect the semantic buffer. The SemanticBufferProcessor exposed by the semantic manager, together with the ISemanticBuffer itself, provides a number of useful functions for interrogating the buffers. These functions allow you to check what is in any given pixel on the screen. The example code below shows how to use each function. As you click on the screen, it prints information about the selected pixel to the console and checks whether that pixel matches a channel you are looking for.

    // Update is called once per frame
    void Update()
    {
        if (PlatformAgnosticInput.touchCount <= 0) { return; }

        var touch = PlatformAgnosticInput.GetTouch(0);
        if (touch.phase == TouchPhase.Began)
        {
            int x = (int)touch.position.x;
            int y = (int)touch.position.y;
            DebugLogSemanticsAt(x, y);
        }
    }

    //examples of all the functions you can use to interrogate the provider/buffers
    void DebugLogSemanticsAt(int x, int y)
    {
        //list the channels that are available
        Debug.Log("Number of Channels available " + _semanticManager.SemanticBufferProcessor.ChannelCount);
        foreach (var c in _semanticManager.SemanticBufferProcessor.Channels)
            Debug.Log(c);

        Debug.Log("Channel Indexes at this pixel");
        int[] channelsInPixel = _semanticManager.SemanticBufferProcessor.GetChannelIndicesAt(x, y);
        foreach (var i in channelsInPixel)
            Debug.Log(i);

        Debug.Log("Channel Names at this pixel");
        string[] channelsNamesInPixel = _semanticManager.SemanticBufferProcessor.GetChannelNamesAt(x, y);
        foreach (var i in channelsNamesInPixel)
            Debug.Log(i);

        bool skyExistsAtWithIndex = _semanticManager.SemanticBufferProcessor.DoesChannelExistAt(x, y, 0);
        bool skyExistsAtWithName = _semanticManager.SemanticBufferProcessor.DoesChannelExistAt(x, y, "sky");
        Debug.Log("Does This pixel have sky using index: " + skyExistsAtWithIndex);
        Debug.Log("Does This pixel have sky using name: " + skyExistsAtWithName);

        //check if a channel exists anywhere on the screen.
        Debug.Log("Does the screen contain sky using index " + _currentBuffer.DoesChannelExist(0));
        Debug.Log("Does the screen contain ground using index " + _currentBuffer.DoesChannelExist(1));
        Debug.Log("Does the screen contain sky using name " + _currentBuffer.DoesChannelExist("sky"));
        Debug.Log("Does the screen contain ground using name " + _currentBuffer.DoesChannelExist("ground"));

        //if you want to do any masking yourself.
        UInt32 packedSemanticPixel = _semanticManager.SemanticBufferProcessor.GetSemantics(x, y);
        UInt32 maskFromIndex = _currentBuffer.GetChannelTextureMask(0);
        UInt32 maskFromString = _currentBuffer.GetChannelTextureMask("sky");
        Debug.Log("packedSemanticPixel " + packedSemanticPixel);
        Debug.Log("sky mask from index " + maskFromIndex);
        Debug.Log("sky mask from name " + maskFromString);

        //manually masking things.
        if ((packedSemanticPixel & maskFromIndex) > 0)
        {
            Debug.Log("manually using bit mask this pixel is sky");
        }

        int[] channelIndices = { 0, 1 };
        UInt32 maskForMultipleChannelsFromIndices = _currentBuffer.GetChannelTextureMask(channelIndices);
        Debug.Log("Combined bit mask for sky and ground using indices " + maskForMultipleChannelsFromIndices);

        string[] channelNames = { "ground", "sky" };
        UInt32 maskForMultipleChannelsFromName = _currentBuffer.GetChannelTextureMask(channelNames);
        Debug.Log("Combined bit mask for sky and ground using strings " + maskForMultipleChannelsFromName);
    }

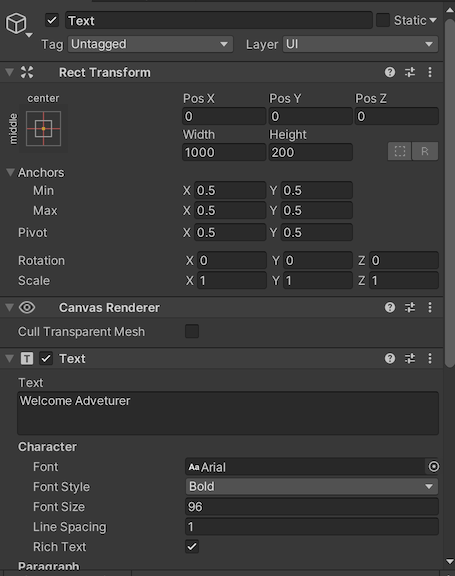
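To make the manual masking step more concrete, here is a small sketch in plain C# of how a packed pixel value and per-channel bit masks can be combined and tested. The channel list and the bit layout (channel i maps to bit i) are assumptions made purely for illustration. The ARDK's actual layout may differ, so in a real project the masks should come from GetChannelTextureMask rather than being computed by hand. `SemanticMaskSketch` and its members are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

public static class SemanticMaskSketch
{
    // Hypothetical channel order; the real list is reported by the ARDK at runtime.
    public static readonly string[] Channels = { "sky", "ground", "grass", "water" };

    // ASSUMPTION: channel i maps to bit i. The real bit layout may differ.
    public static uint MaskFor(int channelIndex) => 1u << channelIndex;

    // OR the single-channel masks together to get a mask covering several channels.
    public static uint CombinedMask(IEnumerable<int> channelIndices)
    {
        uint mask = 0;
        foreach (int index in channelIndices)
        {
            mask |= MaskFor(index);
        }
        return mask;
    }

    // True if the packed pixel has at least one of the channels in the mask.
    public static bool HasAny(uint packedPixel, uint mask) => (packedPixel & mask) != 0;

    // Decode a packed pixel into the names of the channels it contains.
    public static List<string> NamesIn(uint packedPixel)
    {
        var names = new List<string>();
        for (int i = 0; i < Channels.Length; i++)
        {
            if (HasAny(packedPixel, MaskFor(i)))
            {
                names.Add(Channels[i]);
            }
        }
        return names;
    }
}
```

Under these assumptions, a pixel built as `MaskFor(0) | MaskFor(2)` would decode to sky and grass, and testing it against `CombinedMask(new[] { 0, 1 })` would succeed because the sky bit is set.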

Create a simple game that asks the user to find a particular item: grass, wood, water, clouds, etc. Our game will have the user search for a randomized set of item types, with a limited amount of time to find them. First let’s add some on-screen text rather than using Debug.Log statements.

Add a Text object to put our text on screen. Right-click in your scene root and select Game Object > UI > Text.

Pass this into your script as a public variable:

    // Text lives in the UnityEngine.UI namespace, so add "using UnityEngine.UI;" at the top of the file
    public class QuerySemantics : MonoBehaviour
    {
        ISemanticBuffer _currentBuffer;

        public ARSemanticSegmentationManager _semanticManager;
        public Camera _camera;

        //pass in our text object
        public Text _text;

        // ...
    }

Add game logic to our script. We will add a timer and randomly pick items to find from a list. We'll use a simple win condition of finding five items.

    using System;
    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine;
    using UnityEngine.UI; // needed for the Text component

    using Niantic.ARDK.AR;
    using Niantic.ARDK.Utilities;
    using Niantic.ARDK.Utilities.Input.Legacy;
    using Niantic.ARDK.AR.ARSessionEventArgs;
    using Niantic.ARDK.AR.Configuration;
    using Niantic.ARDK.AR.Awareness;
    using Niantic.ARDK.AR.Awareness.Semantics;
    using Niantic.ARDK.Extensions;

    public class QuerySemantics : MonoBehaviour
    {
        ISemanticBuffer _currentBuffer;

        public ARSemanticSegmentationManager _semanticManager;
        public Camera _camera;
        public Text _text;

        void Start()
        {
            //add a callback for catching the updated semantic buffer
            _semanticManager.SemanticBufferUpdated += OnSemanticsBufferUpdated;
        }

        private void OnSemanticsBufferUpdated(ContextAwarenessStreamUpdatedArgs<ISemanticBuffer> args)
        {
            //get the current buffer
            _currentBuffer = args.Sender.AwarenessBuffer;
        }

        //our list of possible items to find.
        string[] items = { "grass", "water", "foliage" };

        //timer for how long the user has to find the current item
        float _findTimer = 0.0f;

        //cooldown timer for how long to wait before issuing another find item request
        float _waitTimer = 2.0f;

        //our score to track for our win condition
        int _score = 0;

        //the current item we are looking for
        string _thingToFind = "";

        //if we found the item this frame.
        bool _found = true;

        //function to pick a random item to fetch from the list
        void PickRandomItemToFind()
        {
            //the int overload excludes the upper bound, so the index is always valid
            int randCh = UnityEngine.Random.Range(0, items.Length);
            _thingToFind = items[randCh];
            _findTimer = UnityEngine.Random.Range(5.0f, 10.0f);
            _found = false;
        }

        // Update is called once per frame
        void Update()
        {
            //our win condition you found 5 things
            if (_score >= 5)
            {
                _text.text = "Now that I have all of the parts I can make your quest reward.\n+2 vorpal shoulderpads!";
                return;
            }

            //tick down our timers
            _findTimer -= Time.deltaTime;
            _waitTimer -= Time.deltaTime;

            //wait here in between quests for a bit
            if (_waitTimer > 0.0f)
                return;

            //the allotted time to find an item is expired
            if (_findTimer <= 0.0f)
            {
                //fail condition if we did not find it.
                if (_found == false)
                {
                    _text.text = "Quest failed";
                    _waitTimer = 2.0f;
                    _found = true;
                    return;
                }

                //otherwise pick a new thing to find
                PickRandomItemToFind();
                _text.text = "Hey there adventurer could you find me some " + _thingToFind;
            }

            //input functions
            if (PlatformAgnosticInput.touchCount <= 0) { return; }

            var touch = PlatformAgnosticInput.GetTouch(0);
            if (touch.phase == TouchPhase.Began)
            {
                //get the current touch position
                int x = (int)touch.position.x;
                int y = (int)touch.position.y;

                //ask the semantic manager if the item we are looking for is in the selected pixel
                if (_semanticManager.SemanticBufferProcessor.DoesChannelExistAt(x, y, _thingToFind))
                {
                    //if so then trigger success for this request and reset timers
                    _text.text = "Thanks adventurer, this is just what I was looking for!";
                    _findTimer = 0.0f;
                    _waitTimer = 2.0f;
                    _score++;
                    _found = true;
                }
                else
                {
                    //if not look at what is in that pixel and give the user some feedback
                    string[] channelsNamesInPixel = _semanticManager.SemanticBufferProcessor.GetChannelNamesAt(x, y);
                    string found;
                    if (channelsNamesInPixel.Length > 0)
                        found = channelsNamesInPixel[0];
                    else
                        found = "thin air";

                    _text.text = "Nah that's " + found + ", I was after " + _thingToFind;
                }
            }
        }
    }
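The timer and scoring logic in the script above can also be exercised without Unity or a device. The following is a rough sketch in plain C# that reproduces the same wait/find/fail cycle so it can be stepped through by hand. `FindQuest`, `Tick`, and `Tap` are hypothetical names introduced only for this illustration. The array passed to `Tap` stands in for the result of GetChannelNamesAt, and unlike the tutorial script this sketch ignores taps while no request is active.

```csharp
using System;

public class FindQuest
{
    private readonly string[] _items;
    private readonly Random _rng;
    private float _findTimer = 0.0f;   // time left to find the current item
    private float _waitTimer = 2.0f;   // cooldown before the next request
    private bool _found = true;        // true while no request is outstanding

    public int Score { get; private set; }
    public string Target { get; private set; } = "";
    public string Message { get; private set; } = "";

    public FindQuest(string[] items, int seed)
    {
        _items = items;
        _rng = new Random(seed);
    }

    // Advance both timers by dt seconds; mirrors the timer part of Update().
    public void Tick(float dt)
    {
        _findTimer -= dt;
        _waitTimer -= dt;
        if (_waitTimer > 0.0f) return;   // still cooling down between requests
        if (_findTimer > 0.0f) return;   // current request is still running

        if (!_found)
        {
            // time ran out before the item was found
            Message = "Quest failed";
            _waitTimer = 2.0f;
            _found = true;
            return;
        }

        // Next(n) returns 0..n-1, so the index is always in range
        Target = _items[_rng.Next(_items.Length)];
        _findTimer = 5.0f + (float)_rng.NextDouble() * 5.0f;
        _found = false;
        Message = "Find me some " + Target;
    }

    // Report a tap; channelsAtPixel stands in for the channel names at that pixel.
    public bool Tap(string[] channelsAtPixel)
    {
        if (_found || Array.IndexOf(channelsAtPixel, Target) < 0) return false;
        Score++;
        _found = true;
        _findTimer = 0.0f;
        _waitTimer = 2.0f;
        return true;
    }
}
```

Keeping the rules in a class like this, separate from input and rendering, is one possible way to tune the timer values before testing on a phone.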

Test in the Unity editor, and optionally make a more natural test environment. Feel free to add more objects to the ParkPond prefab to make the test more interesting. You could set up some boxes for trees & buildings, and then use planes for the ground, water etc. Or even import one of your existing environments to annotate.

Test on your device. We have tested the logic in Unity using the mocking system, so there shouldn't be any unexpected behavior when it runs on your phone. You may need to increase the amount of time the player gets to find something, since the player will need to move around their environment while searching.